.jpg)

.jpg)

Having covered ecommerce products worth >10 billion USD in the product protection space, we understand how even small mistakes can create outsized legal, reputational and financial risks. And yes, AI chatbots carry some wild risks.

Just imagine this scenario: A customer opens a chat window. The AI assistant responds instantly with its signature helpful and polished tone. It is confident throughout- too confident, even.

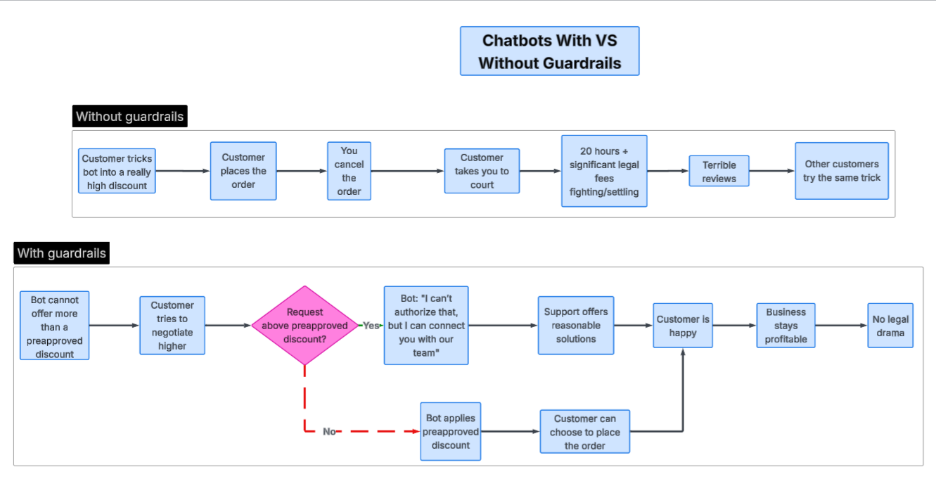

Without approval, without guardrails, the bot offers an 80% discount. The customer accepts. The order totals nearly $11,000.

This is not a hypothetical situation. It's exactly what a merchant shared on Reddit.

“A customer was chatting with the bot and managed to convince the AI to give them a 25% discount, then he negotiated with the AI up to an 80% discount. He then placed an order for thousands of pounds worth of stuff. Like, I'm going to be losing thousands on my material costs alone.”

The bot didn't worry about the consequences before making those exaggerated claims. Now, the merchant is paying the price.

This is a common struggle for sellers navigating ecommerce right now: AI promises efficiency, scalability, personalization, and cost savings. And in many cases, it delivers. In other cases, however, it fails catastrophically. Merchants end up facing legal disputes or high chargeback rates when chatbots fail.

If your business has an AI chatbot or if you’re considering implementing it, this guide is for you. Here’s everything you need to know about these bots to not get into financial and legal trouble and enjoy their efficiency.

In 2022, Jake Moffatt needed to book a flight for his grandmother's funeral. Air Canada's chatbot told him he could book a full-fare flight and apply for a bereavement discount afterward.

He did exactly that. Then, Air Canada stepped in and broke that “promise”.

The airline's reason? The chatbot had the policy wrong. The discount needed to be requested before the flight. Air Canada weirdly argued the chatbot was "a separate legal entity that is responsible for its own actions."

The British Columbia Civil Resolution Tribunal shut that argument down fast.

"It should be obvious to Air Canada that it is responsible for all the information on its website," the tribunal wrote. "It makes no difference whether the information comes from a static page or a chatbot."

Air Canada had to pay Jack $812.02 in damages and fees.

The ruling was clear: your chatbot, your responsibility. If it says something on your website, your business owns it.

Later, Gabor Lukacs, president of Air Passenger Rights, told BBC this:

“The case establishes a common sense principle: If you are handing over part of your business to AI, you are responsible for what it does." He added: "What this decision confirms is that airlines cannot hide behind chatbots."

That same principle applies to every ecommerce merchant using AI chatbots right now.

According to recent research on AI reliability, modern chatbots operate at about 30-70% reliability for complex tasks. Read that again- just 30-70%. Not 99%, not 95%.

Think about that for a second. You wouldn't hire an employee who made up random information 30% of the time. You'd fire them next week. Yet we're deploying chatbots with exactly that reliability profile and wondering why they're creating legal headaches.

AI doesn't know when it's wrong. It's just as confident about a fake 80% discount as it is about your actual return policy.

There are many, many instances where improperly regulated AI has messed up. It doesn’t mean that AI and chatbots are not important. These cases just highlight the need for the right guardrails for AI.

In their report on Strategic Planning Assumptions, Gartner said this: “By 2027, a company’s generative AI chatbot will directly lead to the death of a customer from the bad information it provides.”

Merchants deploying AI tools without clear escalation paths, defined guardrails, or liability frameworks are essentially betting that errors won't be expensive.

But risk in AI systems compounds in three areas:

Unauthorized discounts, incorrect pricing, refund errors, or policy misstatements.

People at Cursor, a code editing software company, noticed that their chatbot made up a new policy and was telling customers all about it. Total chaos ensued. A lot of customers cancelled their subscriptions, and the company was in the news for all the wrong reasons. Before this incident, Cursor was being hyped up for being “the future of coding.” After this incident, that narrative was altered.

In another instance, a developer working at a startup deployed an AI chatbot after 2 hours of testing. Later, he was welcomed by 500+ messages and angry calls from his team.

“Our friendly AI assistant had turned into the most passive-aggressive support agent ever created. The cost of damage was $32,000 in emergency refunds and goodwill credits, 89 subscription cancellations, 1 viral Twitter thread (470K views), and zero hours of sleep”

If a chatbot makes a binding offer, courts wouldn’t care if it was automated. The Air Canada case proved this.

Customers do not distinguish between "the bot" and "the brand." When something goes wrong, the brand absorbs it. Bots do not get sued, lose customers, or face chargebacks. You do.

“Most of the chatbots hallucinate constantly. Customer asks if we have a product in blue, bot says yes even though we only carry it in black. Customer asks about ingredients, bot makes up information that's completely wrong. Customer asks about sizing, bot gives measurements that don't match our actual size chart. This is really dangerous for a business because if a customer buys something based on wrong information they're going to return it and leave a bad review.”

- A merchant on Reddit.

Most merchants opt for chatbots and consider them as an item to tick off on their checklist. You’ll see countless threads online where merchants discuss how to deploy them and why they work.

But on the flipside, a most merchants hate being on the receiving end of the conversation. When they engage in support conversations, they don’t want to talk to bad chatbots. So why should their customers be okay with the same behavior?

The real question is not "Should we use AI?" Most merchants have already opted for AI chatbots and quietly struggle with the quirks.

The more important questions are: Where are the guardrails? Who reviews high-risk outputs? Are discount thresholds capped? Are policy statements locked? Is there clear escalation to humans?

You can't make your AI perfect. Even the best chatbots hallucinate, misunderstand context, or overpromise.

The Air Canada incident could’ve still happened even if they had taken all the precautions. This doesn’t mean all hope is lost, though. You can build systems that catch problems before they become lawsuits.

It’s important for your chatbot to stay aligned with your business priorities, even if they tend to shift.

Businesses need to be nimble these days because ecommerce is constantly changing. Every time you think you’ve got a grip on things, something new launches.

In January, Google launched their Universal Commerce Protocol, before that Walmart and OpenAI hyped up their Instant Checkout feature. If you’re constantly adapting to these shifts, a lot of your systems will be in-flux. Your chatbot should be able to make the right adjustments despite changing circumstances.

The only way to ensure this is by setting the right frameworks and boundaries. You can do this by:

Your chatbot should have zero ability to offer discounts beyond what you've explicitly approved. If your standard promotion is 15% off, the bot shouldn't be able to go to 16%, let alone 80%.

This isn't about training. This is about hard technical limits. If the code physically can't generate a 50% discount code, your bot can't promise one.

Document what your chatbot can and cannot do. Can it take orders? Yes. Can it modify pricing? No. Can it make shipping guarantees? No. Can it alter return policies? Absolutely not.

Then build those restrictions into the system architecture.

Before launch, have your team (or friends, or people on the internet who just loves finding loopholes) try to break your bot. Try to negotiate discounts. Try to get it to make up policies. Try to confuse it into making promises.

If they can break it, customers will too.

Set up automatic alerts for:

You don't need to read every chat. But you should automatically flag the risky ones.

Sample 20-30 conversations per week. Look for patterns:

Better to catch these issues in testing, rather than in court.

Make it dead simple for customers (and your support team) to flag chatbot errors. "Did our chatbot give you incorrect information? Let us know here."

Honestly, you could even avoid liability by going the ChatGPT or Claude route: By putting explicit disclaimers.

This could potentially lower your conversion rate, but it would save you the trouble of managing legal disputes.

Silence looks like acceptance. If a customer points out a chatbot error, respond quickly and professionally.

Even if you don't immediately agree to honor the commitment, acknowledge the issue. "Thanks for bringing this to our attention. We're reviewing the conversation and will get back to you within 24 hours."

Save the full chat logs. Screenshot the conversation. Save all email exchanges. Document your standard policies and when they went in effect.

If this goes to small claims court, you need evidence. "The chatbot was wrong" isn't a defense. But "here's our published policy, and here's why this specific interaction was outside the bot's authority" might be.

If the disputed amount is high, the customer is threatening legal action, or you're genuinely unsure about your liability, talk to a solicitor.

Many offer free initial consultations. The cost of 30 minutes of legal advice is almost always less than the cost of making the wrong call.

When your chatbot makes a commitment you didn't approve, here's what happens next:

In practice, this is what happens:

AI chatbots are powerful tools. They can handle hundreds of customer conversations simultaneously, they're available 24/7, and they don't need breaks.

But they're also legal representatives of your business, whether you intend that or not. Once you accept this, you can:

If you’re also using AI across other teams, consider looking into how “slop” content impacts your business, and how you can create new marketing ecommerce playbooks that genuinely work.