Trust has become the internet’s most overused word and also the most under-delivered promise.

Every platform says it’s “built on trust.” Every brand claims it’s “trusted by thousands.”

But somewhere along the way, the meaning got lost.

If you’ve ever been on the receiving end of an online review, you’ve seen how platforms that once stood for transparency now host fake praise, fake outrage, and fake credibility, leaving merchants unsure of what’s real and what’s not.

And yes, let’s be fair here. This isn’t a one-sided argument.

It’s not about merchants versus customers. Yes, these platforms have benefits as well: helping customers make smarter choices, holding bad products accountable, and giving small businesses visibility. That’s all true, but it’s not the central argument of this blog.

Truth is, there are barely any repercussions for bad actors. Someone can post a fake review, tank a rating, and walk away without consequence. A few angry sentences are all it takes to damage a business, and the person behind them may never face a problem for it.

It’s not too different from chargebacks. In both cases, the system is designed to protect consumers, and it should, but the burden of proof always falls on the merchant.

According to a 2021 study by Professor Roberto Cavazos, fake reviews cost businesses $152 billion globally every year.

That’s not just a number; that’s thousands of merchants watching their reputation shatter overnight, knowing that many of the people behind those reviews probably never even bought from them or, worse, that the reviews were written by AI.

As much as review platforms claim to be transparent or unbiased, their business models don’t exactly work that way. The reviewer has almost nothing to lose, especially with the comfort of anonymity.

But for businesses, every bad review comes with a cost: the time, the money, and sometimes even the subscription fees they have to pay just for a fighting chance to defend their reputation.

Platforms like Trustpilot built their reputation on transparency, and now merchants say that’s exactly what’s missing.

On Reddit, you’ll find long threads filled with frustration: fake one-star reviews that never get removed, real ones that vanish overnight, and businesses claiming they’re punished faster than the trolls who target them.

Let that sink in for a second.

Imagine putting years into building your product, your team, and your reputation, then watching it all wobble.

One merchant observed:

“They’ve built a system that weaponises fake reviews to sell protection from the mess they refuse to clean up.”

Another added:

“It feels like the system is designed to create pain and then sell you the cure.”

Of course, Trustpilot says it’s fighting back, deleting millions of fake or harmful reviews, tightening verification, and using AI detection to keep the platform clean.

According to its latest transparency report, over 4.5 million fake or harmful reviews were identified in 2024 alone, with 90% caught automatically.

So yes, there’s truth on both sides.

Every situation online has the good, the bad, and the ugly. Some reviews are real. Some are manipulated. Some are flat-out malicious.

Let’s go deeper into the core tenets of the system that are breaking.

At its simplest, a review platform should prove one thing: that the person leaving feedback actually bought the product or used the service.

That’s why systems like Amazon’s “Verified Purchase”, Google’s anti–review-gating policy, and Yelp’s strict ban on incentivized reviews exist in the first place.

Yet the UK’s Competition and Markets Authority found tens of thousands of fake accounts being sold to merchants on Amazon and Google, each capable of posting multiple verified reviews before detection.

Even when platforms act, it looks like damage control, not prevention.

On top of that, AI is being used to create fake reviews at scale, and the same AI is being trained to catch them.

It’s turned into a cat-and-mouse cycle where machines are fighting machines, each trying to outsmart the other.

As Reddit’s CEO, Steve Huffman, recently put it, the fight against AI-generated content has become an “arms race.”

And as a merchant, your business depends on that fight. You just don’t have any control over it.

I mean, if machines are now deciding what’s real, what happens to context?

A genuine customer using slang or sarcasm can look “suspicious” to an algorithm, while a fake five-star written by a bot reads perfectly normal.

In theory, moderation is supposed to keep the system fair: flag what’s false, protect what’s real, and give both parties a chance to explain.

But at scale, it doesn’t feel fair anymore. It feels like automation with a PR team.

Ask any merchant and you’ll hear the same story: fake reviews linger for weeks, while genuine ones vanish overnight. And when you try to ask why, you’re handed a help-center link or a templated reply that explains nothing.

Even shoppers are starting to feel it.

In a recent Reddit discussion, one user wrote,

“You see five stars and still have to cross-check Reddit, YouTube, or ask friends before buying anything.”

“To make reviews trustworthy again, platforms would need serious verification, like only allowing reviews from confirmed buyers, using timestamps, maybe even flagging AI-written stuff.”

They continued:

“But as long as clicks = money, I don’t think they’ll fix it unless users start leaving.”

When both merchants and customers stop believing the same signal, it’s no longer a moderation issue. It’s a credibility collapse.

Let’s step out of theory for a second.

In October 2025, Lars Lofgren, co-founder of Stone Press, published a report.

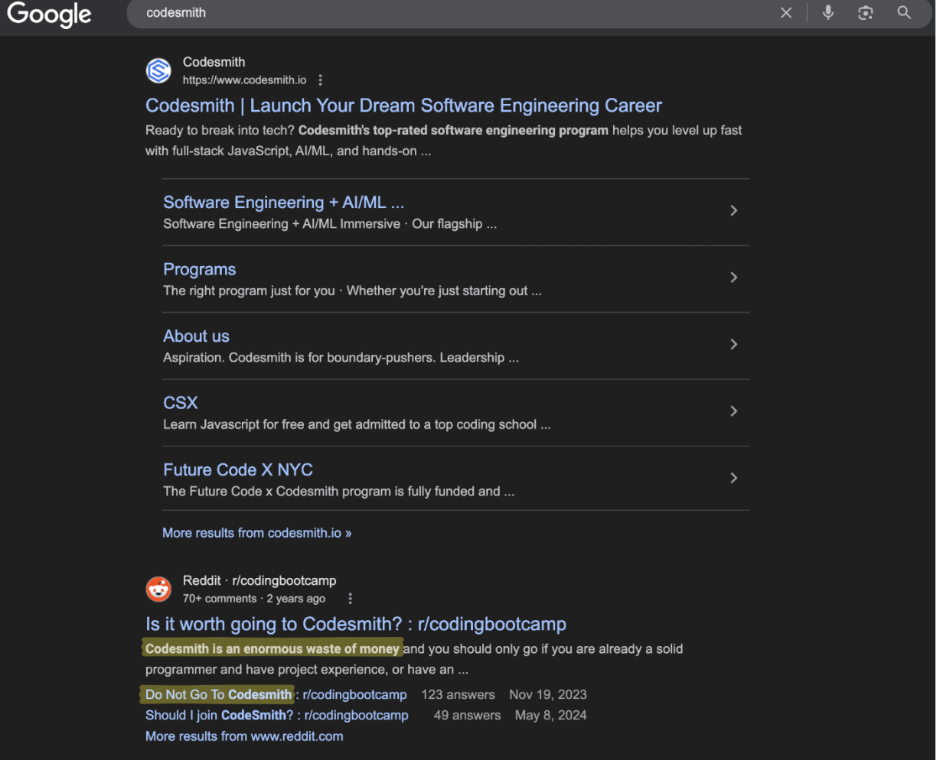

According to his report, a moderator of the subreddit r/codingbootcamp, who also co-founded a rival bootcamp, allegedly used their mod privileges to shape narratives, delete rebuttals, and flood Google with negative Reddit threads about Codesmith, a once-thriving coding school.

Within months, those same threads were ranking second only to Codesmith’s own website on Google and later began appearing in LLM-generated answers. The company reportedly lost nearly 40% of its revenue to reputational damage alone.

The lesson isn’t just about Reddit.

It’s about what happens when moderation becomes a marketplace weapon, when a single person, algorithm, or platform setting can tilt the public narrative faster than truth can catch up.

And until accountability cuts both ways, for reviewers, moderators, and the systems themselves, “trust” will keep getting traded like ad inventory.

If a fake review can wreck a business, there should be consequences for whoever posted it.

Well, you know that’s not how the internet works.

The system’s weight leans almost entirely on merchants. Platforms love to talk about “community integrity,” but read through Reddit or merchant forums and you’ll hear a different story.

One store owner wrote:

“Someone left a review that was clearly fake: wrong product name, wrong order number. I flagged it, sent screenshots proving they weren’t a customer, and Trustpilot still said, ‘We reviewed it and it stays.’

“Meanwhile, when I replied to defend my business, they flagged my response for violating guidelines because I said ‘this person never ordered from us.’”

So yes, there are consequences in the system. But they’re structurally asymmetric.

Yelp’s latest transparency report shows it placed over 547 compensated activity alerts and suspicious review activity alerts on business pages in 2024.

To be fair, platforms are trying. These alerts are meant to flag suspicious activity, to keep shoppers safe and manipulation in check. But for the honest merchants caught in that net, an alert is a public scar that’s hard to explain.

And if merchants have to fight bots, anonymous profiles, and vague policies just to prove they’re real, while fake reviewers face almost no consequences, then really, where’s the balance?

So, if there’s one thing merchants have learned the hard way, it’s this: trust doesn’t really live online.

Every new system that promises transparency eventually becomes a filter you can game, a feed you can hijack, or a policy you can’t appeal. And every fix so far, like AI moderation, verification badges, and “trusted seller” labels, still treats the symptom, not the disease.

Because the real question isn’t “how do we stop fake reviews?”

It’s “who gets to define what’s real?”